To help detect potentially harmful content such as spam and malicious emails in Gmail, Google has unveiled a new multilingual text vectorizer called RETVec, short for Resilient and Efficient Text Vectorizer.

According to the project description on GitHub, “RETVec is trained to be resilient against character-level manipulations including insertion, deletion, typos, homoglyphs, LEET substitution, and more.”

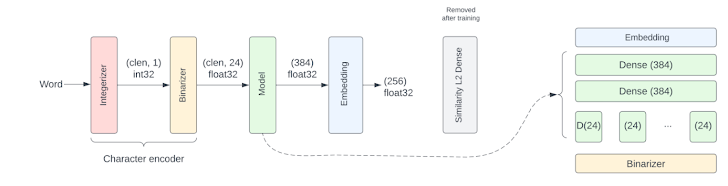

“The RETVec model is trained on top of a novel character encoder which can encode all UTF-8 characters and words efficiently.”

Major platforms like Gmail and YouTube rely on text classification models to identify phishing attacks, inappropriate comments, and scams, but threat actors are known to devise counterstrategies to get around these security measures.

They have been observed using text manipulations such as homoglyphs, LEET substitution, and invisible characters.
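To make these evasions concrete, the following is a minimal sketch, not Google's code. It assumes a hypothetical exact-match keyword filter (`naive_filter`) and a handful of hand-made variants of the word "free". It shows how a substituted or invisible character produces a string that looks similar to a reader but no longer matches the keyword.

```python
KEYWORD = "free"

# Hypothetical evasions of the kind described above (illustrative only).
variants = {
    "original": "free",
    "leet": "fr33",                  # digits substituted for letters
    "homoglyph": "fr\u0435\u0435",   # Cyrillic U+0435 in place of Latin 'e'
    "invisible": "fr\u200bee",       # zero-width space (U+200B) inside the word
}

def naive_filter(text, keyword=KEYWORD):
    """Flag text only when the exact keyword appears as a substring."""
    return keyword in text.lower()

# Only the unmodified word is flagged; every manipulated variant slips through.
results = {name: naive_filter(text) for name, text in variants.items()}
print(results)
```

A classifier that depends on exact tokens has the same blind spot, which is the weakness a manipulation-resilient vectorizer is meant to address.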

With its out-of-the-box support for over 100 languages, RETVec aims to contribute to the development of more robust and efficient server-side and on-device text classifiers.

In natural language processing (NLP), vectorization is a technique that maps words or phrases from a vocabulary to a corresponding numerical representation for use in tasks such as sentiment analysis, text classification, and named entity recognition.
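As a rough illustration of vectorization in general, here is a toy word-count vectorizer. It is a simplified sketch and not how RETVec works internally; the helper names `build_vocabulary` and `vectorize` and the two-sentence corpus are assumptions made for the example.

```python
from collections import Counter

def build_vocabulary(texts):
    """Assign each distinct lowercase word an index, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def vectorize(text, vocab):
    """Return a count vector; words outside the vocabulary are dropped."""
    counts = Counter(text.lower().split())
    return [counts.get(word, 0) for word in vocab]

corpus = ["win a free prize", "meeting at noon"]
vocab = build_vocabulary(corpus)

clean = vectorize("free prize free", vocab)
obfuscated = vectorize("fr3e pr1ze", vocab)
print(clean)       # [0, 0, 2, 1, 0, 0, 0]
print(obfuscated)  # [0, 0, 0, 0, 0, 0, 0]
```

The obfuscated input maps to an all-zero vector because none of its words are in the vocabulary, so a downstream classifier sees no signal at all. Word-level schemes like this one are brittle in exactly the way character-level manipulations exploit.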

“Due to its novel architecture, RETVec works out-of-the-box on every language and all UTF-8 characters without the need for text preprocessing, making it the ideal candidate for on-device, web, and large-scale text classification deployments,” said Marina Zhang and Elie Bursztein of Google.

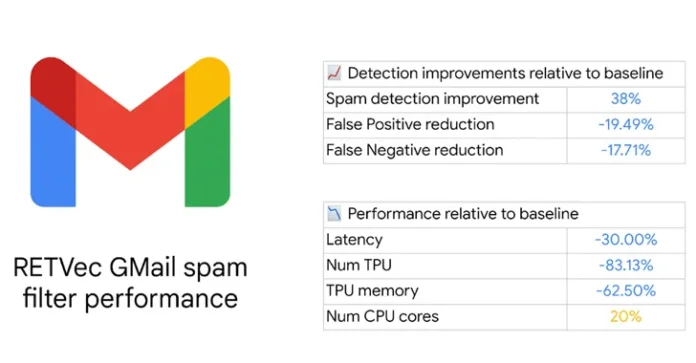

According to the tech giant, the vectorizer’s integration with Gmail increased the rate of spam detection by 38% compared to the baseline and decreased the rate of false positives by 19.4%. Additionally, it reduced the model’s Tensor Processing Unit (TPU) usage by 83%.

The compact representation of RETVec allows for faster inference speed in models trained with it. Bursztein and Zhang noted that “having smaller models lowers latency and computational costs, which is critical for large-scale applications and on-device models.”